This is a draft of an article about session management and caching. It's nearly complete; the main thing missing is a list of use case descriptions. Apart from that, I'd like to get some feedback on this, so if you still have questions after reading it, or you would like specific details included, please leave a comment and tell me. Eventually this will be published on the main website.

About Sessions

The XPO Session has evolved since XPO version 1. In the first version of XPO, the Session object encapsulated the object cache, the connection, and the Dictionary, which stores all the metadata XPO has collected about the persistent classes in the current application. Most importantly, it also handled the initialization process, in which XPO recognizes all the persistent types and checks them against the persistent structures in the database.

In XPO 6.1 we have made substantial changes to this system by extracting a separate data layer. The data layer sits between the connection provider (interface IDataStore) and the Session. Its tasks are to handle all the metadata management that was previously part of the Session, and to perform the database initialization and checking. The main benefit of this change is that Session creation is now an extremely lightweight process. In many use cases it's desirable to create new Session instances rapidly and regularly, but with XPO 1 this wasn't really an option because of the overhead each new Session instance had.

So how should Sessions be used?

As usual, there's no one-size-fits-all answer to this question. But the recommendation, in a nutshell, is to use a fresh Session instance for each coherent batch of work. In many cases, using a UnitOfWork instead of a generic Session instance is a very good idea (the UnitOfWork class is described in the XPO documentation). A Session and its object cache are most efficient when the algorithms running with a single Session instance are long enough to make good use of the cached data, but short enough that the data doesn't have to be reloaded. The paragraphs below explain this statement in more detail.

The Session as an Identity Map

As was already the case in XPO 1, the Session implements an object cache, which serves the purpose of an Identity Map. The Identity Map is best explained in Martin Fowler's book "Patterns of Enterprise Application Architecture", but in short, its task is to make sure that when the same object is part of two separately queried result sets, the exact same object instance is returned, not a clone or a copy of the object. The following test should always pass (assuming there's a Person object in the database with the Name "Billy Bott"):

```csharp
Person person1 = session.FindObject<Person>(new BinaryOperator("Name", "Billy Bott"));
Person person2 = session.FindObject<Person>(new BinaryOperator("Name", "Billy Bott"));
Assert.AreSame(person1, person2);
```

```vb
Dim person1 As Person = session1.FindObject(Of Person)(New BinaryOperator("Name", "Billy Bott"))
Dim person2 As Person = session1.FindObject(Of Person)(New BinaryOperator("Name", "Billy Bott"))
Assert.AreSame(person1, person2)
```

Note: The Session's object cache is not an optional feature; it can't be switched off.

The inner workings of the object cache are really very simple. Every time a query to the database is made, XPO checks for new versions of the relevant objects in the database (using Optimistic Locking, see below for details on this). If the database has more current information than the object cache, the relevant objects are updated in the cache. New objects are created as necessary and added to the cache, and the resulting collection of objects is returned. So if an object exists in the cache already, it is never created a second time and the requirements of the Identity Map are fulfilled.
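The following is a minimal, hypothetical C# sketch of the mechanism just described; it is not XPO's actual implementation. It assumes each queried row carries a key and an integer version (standing in for the optimistic locking field), and the classes `Row`, `Entity`, and `IdentityMap` are invented for illustration. It shows that a key is materialized into an object exactly once, and that later results for the same key update that instance in place.

```csharp
using System.Collections.Generic;

// Hypothetical query result row: a key, some content, and an integer
// version that plays the role of the optimistic locking field.
public sealed class Row
{
    public int Id { get; }
    public string Name { get; }
    public int Version { get; }

    public Row(int id, string name, int version)
    {
        Id = id;
        Name = name;
        Version = version;
    }
}

// Hypothetical persistent object held in the object cache.
public sealed class Entity
{
    public int Id { get; }
    public string Name { get; set; }
    public int Version { get; set; }

    public Entity(int id) { Id = id; }
}

// Minimal identity map: exactly one Entity instance per key.
public sealed class IdentityMap
{
    private readonly Dictionary<int, Entity> cache = new Dictionary<int, Entity>();

    // Turns query result rows into objects, reusing cached instances.
    public List<Entity> Materialize(IEnumerable<Row> rows)
    {
        var result = new List<Entity>();
        foreach (Row row in rows)
        {
            if (!cache.TryGetValue(row.Id, out Entity entity))
            {
                // Not in the cache yet: create the object once and remember it.
                entity = new Entity(row.Id) { Name = row.Name, Version = row.Version };
                cache.Add(row.Id, entity);
            }
            else if (entity.Version != row.Version)
            {
                // Cached, but the row has changed since it was loaded:
                // update the existing instance instead of creating a copy.
                entity.Name = row.Name;
                entity.Version = row.Version;
            }
            result.Add(entity);
        }
        return result;
    }
}
```

Calling `Materialize` twice with rows for the same key returns the same `Entity` instance both times, which is the property the `Assert.AreSame` test above checks against XPO.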

How current is the object cache?

When copies of data are stored on the client side, the question to ask is obviously "how do we make sure that this data is always as current as necessary?" But while that question is pretty easy to come up with, the answer to it is not as easy - mostly because "as current as necessary" means different things in different projects. XPO implements and allows for a number of different approaches to deal with the problem of cache currency.

- Using a new Session instance creates a new object cache. The shorter your Session instance's lifetime, the more current your object cache content. This is the only approach that doesn't break object cache consistency (for details on object cache consistency, see below) and it's the only generally recommended approach.

- Using Optimistic Locking (again, see below for details), XPO checks the currency of objects in the cache automatically. The following test code shows how automatic updates work:

```csharp
// Create a test object
using (Session session = new Session()) {
    new Person(session, "Billy Bott").Save();
}

Session session1 = new Session();
Session session2 = new Session();

// Create a collection in session1 and check the content
XPCollection<Person> peopleSession1 = new XPCollection<Person>(session1);
Assert.AreEqual(1, peopleSession1.Count);
Assert.AreEqual("Billy Bott", peopleSession1[0].Name);

// Fetch the test object in session2 and make a change
Person billySession2 = session2.FindObject<Person>(new BinaryOperator("Name", "Billy Bott"));
billySession2.Name = "Billy's new name";
billySession2.Save();

// Create a new collection in session1 - it will have the change from the other session
// Note that instead of another session, we could create this collection on a
// different client computer with the same outcome.
XPCollection<Person> newPeopleSession1 = new XPCollection<Person>(session1);
Assert.AreEqual(1, newPeopleSession1.Count);
Assert.AreEqual("Billy's new name", newPeopleSession1[0].Name);

// This last test shows the workings of the Identity Map - the object in the "old"
// collection has the same change, because it's actually the same object.
Assert.AreEqual("Billy's new name", peopleSession1[0].Name);
Assert.AreSame(peopleSession1[0], newPeopleSession1[0]);
```

```vb
' Create a test object
Using setupSession As New Session()
    Dim billy As New Person(setupSession, "Billy Bott")
    billy.Save()
End Using

Dim session1 As New Session()
Dim session2 As New Session()

' Create a collection in session1 and check the content
Dim peopleSession1 As New XPCollection(Of Person)(session1)
Assert.AreEqual(1, peopleSession1.Count)
Assert.AreEqual("Billy Bott", peopleSession1(0).Name)

' Fetch the test object in session2 and make a change
Dim billySession2 As Person = session2.FindObject(Of Person)(New BinaryOperator("Name", "Billy Bott"))
billySession2.Name = "Billy's new name"
billySession2.Save()

' Create a new collection in session1 - it will have the change from the other session
' Note that instead of another session, we could create this collection on a
' different client computer with the same outcome.
Dim newPeopleSession1 As New XPCollection(Of Person)(session1)
Assert.AreEqual(1, newPeopleSession1.Count)
Assert.AreEqual("Billy's new name", newPeopleSession1(0).Name)

' This last test shows the workings of the Identity Map - the object in the "old"
' collection has the same change, because it's actually the same object.
Assert.AreEqual("Billy's new name", peopleSession1(0).Name)
Assert.AreSame(peopleSession1(0), newPeopleSession1(0))
```

There are several methods that allow the programmer to reload content from the database explicitly, or at least that is what they seem to do. There's Session.Reload(object), which reloads a single object, and XPBaseObject.Reload(), which has the object call into its session to reload itself. An alternate overload, Session.Reload(object, forceAggregatesReload), reloads all aggregated properties in addition to the object itself. Finally, there's XPBaseCollection.Reload(), which reloads all objects in a collection.

To prevent breaking object cache consistency, the Session.DropCache() method is available to drop the complete object cache content at once. Be aware that dropping the object cache invalidates all objects that have previously been loaded through it, so you must not continue working with these object instances after dropping the cache; all collections must be reloaded! This is mainly an alternative to the use of multiple sessions, for cases where it's desirable to reuse the same Session instance.
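To illustrate why old instances must not be used after dropping the cache, here is a hypothetical C# sketch of an object cache with a DropCache-like operation. `ObjectCache`, `CachedPerson`, and `Load` are invented names, and `Load` simply takes the current database value as a parameter instead of querying a real database.

```csharp
using System.Collections.Generic;

// Hypothetical cached object.
public sealed class CachedPerson
{
    public int Id { get; }
    public string Name { get; set; }

    public CachedPerson(int id, string name)
    {
        Id = id;
        Name = name;
    }
}

// Hypothetical object cache with a DropCache-like operation.
public sealed class ObjectCache
{
    private readonly Dictionary<int, CachedPerson> objects = new Dictionary<int, CachedPerson>();

    // Returns the cached instance for the key (refreshed with the current
    // database value), or creates and caches a new one.
    public CachedPerson Load(int id, string nameInDatabase)
    {
        if (objects.TryGetValue(id, out CachedPerson person))
        {
            person.Name = nameInDatabase;
            return person;
        }
        person = new CachedPerson(id, nameInDatabase);
        objects.Add(id, person);
        return person;
    }

    // Forgets every instance; the cache no longer tracks or refreshes them.
    public void DropCache() => objects.Clear();
}
```

After `DropCache()`, loading the same key creates a new instance, while the instance obtained earlier is no longer tracked and keeps its old values. Code that still holds the old instance, such as a collection that has not been reloaded, silently works with stale data.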

The above code example could be modified like this to show collection reloading:

```csharp
// Create a test object
using (Session session = new Session()) {
    new Person(session, "Billy Bott").Save();
}

Session session1 = new Session();
Session session2 = new Session();

// Create a collection in session1 and check the content
XPCollection<Person> peopleSession1 = new XPCollection<Person>(session1);
Assert.AreEqual(1, peopleSession1.Count);
Assert.AreEqual("Billy Bott", peopleSession1[0].Name);

// Fetch the test object in session2 and make a change
Person billySession2 = session2.FindObject<Person>(new BinaryOperator("Name", "Billy Bott"));
billySession2.Name = "Billy's new name";
billySession2.Save();

// The old session1 collection still has the old "version" of the object
Assert.AreEqual("Billy Bott", peopleSession1[0].Name);

// Now reload the session1 collection and check - the change will be there
peopleSession1.Reload();
Assert.AreEqual("Billy's new name", peopleSession1[0].Name);
```

```vb
' Create a test object
Using setupSession As New Session()
    Dim billy As New Person(setupSession, "Billy Bott")
    billy.Save()
End Using

Dim session1 As New Session()
Dim session2 As New Session()

' Create a collection in session1 and check the content
Dim peopleSession1 As New XPCollection(Of Person)(session1)
Assert.AreEqual(1, peopleSession1.Count)
Assert.AreEqual("Billy Bott", peopleSession1(0).Name)

' Fetch the test object in session2 and make a change
Dim billySession2 As Person = session2.FindObject(Of Person)(New BinaryOperator("Name", "Billy Bott"))
billySession2.Name = "Billy's new name"
billySession2.Save()

' The old session1 collection still has the old "version" of the object
Assert.AreEqual("Billy Bott", peopleSession1(0).Name)

' Now reload the session1 collection and check - the change will be there
peopleSession1.Reload()
Assert.AreEqual("Billy's new name", peopleSession1(0).Name)
```

When reloading data selectively, it is important to note that this is not always as explicit as one might think. The Session.Reload(...) methods are actually the only ones that have an immediate and inevitable effect - when they are called, the given object is refreshed from the database under all circumstances. When collections are reloaded, internally the collection is cleared and marked as "not loaded". So when it's accessed the next time, the "normal" data loading algorithm applies and the currency of the objects in the collection depends on the automatic mechanism above, utilizing Optimistic Locking.
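The difference between an immediate reload and a collection reload can be sketched like this. The hypothetical `LazyCollection<T>` below is not XPO's XPCollection; its `load` delegate is an assumption that stands in for the normal loading algorithm, which in XPO goes through the object cache and its Optimistic Locking checks.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical lazily loaded collection: Reload() does not query anything,
// it only clears the content and marks the collection as "not loaded".
public sealed class LazyCollection<T>
{
    private readonly Func<List<T>> load; // stands in for the normal loading algorithm
    private List<T> items;               // null means "not loaded"

    // Number of times the loading algorithm has actually run.
    public int LoadCount { get; private set; }

    public LazyCollection(Func<List<T>> load) { this.load = load; }

    // Clear and mark as not loaded; no query happens here.
    public void Reload() => items = null;

    public int Count
    {
        get { EnsureLoaded(); return items.Count; }
    }

    public T this[int index]
    {
        get { EnsureLoaded(); return items[index]; }
    }

    // The next access after construction or Reload() triggers the load.
    private void EnsureLoaded()
    {
        if (items == null)
        {
            items = load();
            LoadCount++;
        }
    }
}
```

Calling `Reload()` costs nothing by itself; the query only runs on the next access, and what that access returns is decided by the normal loading path at that moment.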

Object Cache Consistency

The topic of object cache consistency has been mentioned a number of times in the previous paragraphs, so what do we mean by it? To explain, consider how the object cache in a given Session instance is filled. Objects are loaded, in collections or singly, and stored in the object cache. As a result, the objects returned in the result set of a specific XPCollection may be of mixed age: some may have been queried a while ago and have been in the object cache ever since, while others have been queried and created just now.

On the surface, it seems very desirable to have the most current information available everywhere and at all times. But if we give the matter a little more thought, it becomes clear that automatic in-place updates of objects have a lot of drawbacks of their own. For example, in one part of the application, one algorithm might be happily doing its work with data from a global session, while in another part a different algorithm, using the same session, suddenly refreshes data from the database. That might be fatal to the outcome of the first routine, which doesn't expect the data it works with to change suddenly. The whole topic gets even more complicated if you imagine UI controls bound to data collections or single objects, and all the countless other situations where application state depends on data. Automatic notification is often not possible, so changes to data catch everybody unawares.

As these explanations try to show, current data is not always the single most valuable target. Sometimes it's much more important to have data that is consistent in itself, where the objects are all of the same "generation" and together form a correct, complete picture from the application's point of view. Every partial change to this picture would distort the result.

This is why we talk about object cache consistency. Generally, as soon as you use a single instance of the object cache, i.e. a single Session instance, for two or more kinds of work, you fill the object cache with data that might not be logically consistent. Even worse, every time some of this data is refreshed from the database selectively, you are very likely to destroy this consistency. The logical conclusion is simple: a single instance of the object cache, and therefore a single Session instance, should only ever contain data that is guaranteed to be logically consistent. A good way to ensure this is to use Session instances liberally, one for each logical "part" or data handling "process" your application performs.

Despite our belief in these general recommendations, we have tried to make XPO 6.1 so flexible in its design that you can make these decisions yourself. We are trying to shed some light on the pros and cons of single vs. multiple session scenarios, as well as the various reloading practices - it's your decision which way your application is going to go.

Variations

Some of you may have noticed that the problem with object cache consistency is very similar to the problems that relational database systems try to solve with isolation levels. We can't directly reuse that idea in XPO, but we have introduced a flag to switch XPO's behavior when changed objects are encountered in the database. This can be used to configure the "isolation" of data in the object cache, and thereby influence object cache consistency.

The flag is accessible as XpoDefault.OptimisticLockingReadBehavior (for the default value) and as Session.OptimisticLockingReadBehavior (for the session instance specific value) and it can have the following values:

| Value | Behavior |
|---|---|
| Ignore | Changed objects are never reloaded. Best object cache consistency. |
| ReloadObjects | Changed objects are automatically reloaded. |
| ThrowException | When changed objects are encountered, a LockingException is thrown. |
| Mixed | Outside of transactions, the behavior is ReloadObjects; inside transactions it's Ignore. |

The default value for this flag is currently "Mixed".
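As a reading aid, the decision these four values describe can be written as a small function. This is a hypothetical C# sketch: `ReadBehavior`, `ReadAction`, `ChangedObjectException`, and `ReadPolicy` are invented here and are not the XPO types (XPO itself throws a LockingException in the ThrowException case).

```csharp
using System;

// Hypothetical mirror of the four values listed above; not the XPO enum.
public enum ReadBehavior { Ignore, ReloadObjects, ThrowException, Mixed }

public enum ReadAction { KeepCachedVersion, Reload }

// Hypothetical stand-in for XPO's LockingException.
public sealed class ChangedObjectException : Exception
{
    public ChangedObjectException(string message) : base(message) { }
}

public static class ReadPolicy
{
    // Decides what to do when a query returns an object whose version
    // differs from the copy in the object cache.
    public static ReadAction OnChangedObject(ReadBehavior behavior, bool inTransaction)
    {
        switch (behavior)
        {
            case ReadBehavior.Ignore:
                return ReadAction.KeepCachedVersion;
            case ReadBehavior.ReloadObjects:
                return ReadAction.Reload;
            case ReadBehavior.ThrowException:
                throw new ChangedObjectException("Object was changed in the database.");
            case ReadBehavior.Mixed:
                // Reload outside transactions, keep the cached version inside them.
                return inTransaction ? ReadAction.KeepCachedVersion : ReadAction.Reload;
            default:
                throw new ArgumentOutOfRangeException(nameof(behavior));
        }
    }
}
```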

Data Layer Caching

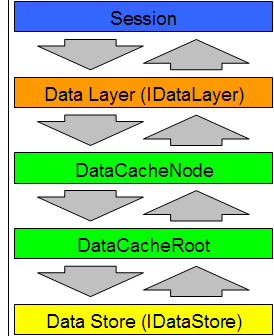

In addition to the object cache described above, XPO also includes functionality for a cache on the data level. This system caches queries and their results as they are being executed on the database server. Two classes must be combined to form a cache structure, DataCacheRoot and DataCacheNode. It's possible to build cache hierarchies out of a single DataCacheRoot instance and any number of DataCacheNode instances. This makes sense when certain parts of an application (or certain applications, thinking of client/server setups) need different settings for their data access, for example because they need particularly current data. The minimum setup of the structure needs one DataCacheRoot and one DataCacheNode. The DataCacheNode is the one that actually caches data; the DataCacheRoot keeps some information global to that cache hierarchy.

The easiest case is this: whenever a query that has been executed before passes through the cache, its result is returned to the client immediately, without a roundtrip to the server.

The DataCacheRoot stores information about updates to tables. Every time a DataCacheNode contacts its parent, table update information is pushed in the direction from Root to Node. These regular contacts between a DataCacheNode and its parent are important for keeping the update information current, so for cases where no contact is necessary for a longer stretch of time, the MaxCacheLatency property comes into play. It defines the maximum time that may pass before contacting the parent becomes mandatory. So if a DataCacheNode receives a query for which it has a cached result set, and more time than MaxCacheLatency has passed since its last contact with its parent, it first performs a quick request to its parent to retrieve update information.
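A minimal, hypothetical C# sketch of the Root/Node interplay described above might look like the following. `ToyCacheRoot` and `ToyCacheNode` are not XPO's DataCacheRoot and DataCacheNode; the sketch assumes that each cached query reads exactly one table, that the caller passes a delegate that executes the query on the database, and that time comes from an injectable clock so the latency logic is testable.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical root: keeps an ordered log of table updates.
public sealed class ToyCacheRoot
{
    private readonly List<string> updateLog = new List<string>();

    // Called for every modification routed through the cache structure.
    public void MarkTableDirty(string table) => updateLog.Add(table);

    // Returns the tables updated after the given log position, plus the new position.
    public (List<string> Tables, int Position) GetUpdatesSince(int position) =>
        (updateLog.GetRange(position, updateLog.Count - position), updateLog.Count);
}

// Hypothetical node: caches query results and must contact its root once
// maxCacheLatency has passed since the last contact.
public sealed class ToyCacheNode
{
    private readonly ToyCacheRoot root;
    private readonly TimeSpan maxCacheLatency;
    private readonly Func<DateTime> clock;
    private readonly Dictionary<string, (string Table, object Result)> cache =
        new Dictionary<string, (string Table, object Result)>();
    private int rootPosition;
    private DateTime lastContact;

    // Number of queries that actually reached the database.
    public int DatabaseQueries { get; private set; }

    public ToyCacheNode(ToyCacheRoot root, TimeSpan maxCacheLatency, Func<DateTime> clock)
    {
        this.root = root;
        this.maxCacheLatency = maxCacheLatency;
        this.clock = clock;
        ContactRoot();
    }

    public object Select(string query, string table, Func<object> executeOnDatabase)
    {
        // Latency exceeded: fetch update information before trusting the cache.
        if (clock() - lastContact > maxCacheLatency)
            ContactRoot();

        if (cache.TryGetValue(query, out var entry))
            return entry.Result; // cache hit: no roundtrip

        // A cache miss goes to the parent anyway, which counts as a contact.
        ContactRoot();
        DatabaseQueries++;
        object result = executeOnDatabase();
        cache[query] = (table, result);
        return result;
    }

    public void Modify(string table, Action executeOnDatabase)
    {
        executeOnDatabase();
        root.MarkTableDirty(table);
        ContactRoot(); // update information flows back on every contact
    }

    // Pulls table update information from the root and drops stale results.
    private void ContactRoot()
    {
        var (tables, position) = root.GetUpdatesSince(rootPosition);
        rootPosition = position;
        lastContact = clock();
        var dirty = new HashSet<string>(tables);
        var stale = new List<string>();
        foreach (var pair in cache)
            if (dirty.Contains(pair.Value.Table))
                stale.Add(pair.Key);
        foreach (string key in stale)
            cache.Remove(key);
    }
}
```

The behavior mirrors the XPO test further down: a node keeps returning its cached result after another node has changed the table, until it contacts the root, here because `maxCacheLatency` has elapsed.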

An important thing to mention is that this system relies on the idea that the cache structure knows about all changes that are being made to the data. So even in a multi-user setup you should make sure that all updates (and selects, obviously) are performed in a way that allows them to be recognized by the cache structure.

For example, a typical client/server setup would expose the interface of the DataCacheRoot (which is called ICacheToCacheCommunicationCore) via Remoting, and use a DataCacheNode on each client. All XPO requests would be routed through the client-side DataCacheNode and the server-side DataCacheRoot. A complete example of this Remoting setup is beyond the scope of this article, but look out for a future article on Remoting. For the time being, there is a pair of samples called "CacheRoot" and "CacheNode" that comes with the XPO distribution.

If, for any reason, there are changes in the database that have been made without going through the cache structure, there are two utility methods that can be helpful. These are NotifyDirtyTables(…) (to inform the cache about specific changes) and CatchUp() (to synchronize the cache completely).

This test code shows how a data cache with two nodes can be constructed, where caching takes place, and where cached results stop being used because of updates. This sample uses an InMemoryDataStore, which could of course be replaced by any other connection provider.

```csharp
// Create the data store and a DataCacheRoot
InMemoryDataStore dataStore =
    new InMemoryDataStore(new DataSet(), AutoCreateOption.SchemaOnly);
DataCacheRoot cacheRoot = new DataCacheRoot(dataStore);

// Create two DataCacheNodes for the same DataCacheRoot
DataCacheNode cacheNode1 = new DataCacheNode(cacheRoot);
DataCacheNode cacheNode2 = new DataCacheNode(cacheRoot);

// Create two data layers and two sessions
SimpleDataLayer dataLayer1 = new SimpleDataLayer(cacheNode1);
SimpleDataLayer dataLayer2 = new SimpleDataLayer(cacheNode2);
Session session1 = new Session(dataLayer1);
Session session2 = new Session(dataLayer2);

// Create an object in session1
Person billySession1 = new Person(session1, "Billy Bott");
billySession1.Save();

// Load and check that object in session2
XPCollection<Person> session2Collection = new XPCollection<Person>(session2);
Assert.AreEqual("Billy Bott", session2Collection[0].Name);

// Make a change to that object in session1
billySession1.Name = "Billy's new name";
billySession1.Save();

// Reload the session2 collection. The DataCacheNode returns the result
// directly, so the change is not recognized. For the fun of it, do this
// several times. This whole block doesn't query the database at all.
for (int i = 0; i < 5; i++) {
    session2Collection.Reload();
    Assert.AreEqual("Billy Bott", session2Collection[0].Name);
}

// Do something (anything) in session2. This makes the cacheNode2
// contact the cacheRoot and information about the updated data in
// the Person table is passed on to cacheNode2.
new DerivedPerson(session2).Save();

// Reload the session2 collection again, and now the change is recognized.
// In this case, the query goes through to the database.
session2Collection.Reload();
Assert.AreEqual("Billy's new name", session2Collection[0].Name);
```

```vb
' Create the data store and a DataCacheRoot
Dim dataStore As New InMemoryDataStore(New DataSet(), AutoCreateOption.SchemaOnly)
Dim cacheRoot As New DataCacheRoot(dataStore)

' Create two DataCacheNodes for the same DataCacheRoot
Dim cacheNode1 As New DataCacheNode(cacheRoot)
Dim cacheNode2 As New DataCacheNode(cacheRoot)

' Create two data layers and two sessions
Dim dataLayer1 As New SimpleDataLayer(cacheNode1)
Dim dataLayer2 As New SimpleDataLayer(cacheNode2)
Dim session1 As New Session(dataLayer1)
Dim session2 As New Session(dataLayer2)

' Create an object in session1
Dim billySession1 As New Person(session1, "Billy Bott")
billySession1.Save()

' Load and check that object in session2
Dim session2Collection As New XPCollection(Of Person)(session2)
Assert.AreEqual("Billy Bott", session2Collection(0).Name)

' Make a change to that object in session1
billySession1.Name = "Billy's new name"
billySession1.Save()

' Reload the session2 collection. The DataCacheNode returns the result
' directly, so the change is not recognized. For the fun of it, do this
' several times. This whole block doesn't query the database at all.
For i As Integer = 0 To 4
    session2Collection.Reload()
    Assert.AreEqual("Billy Bott", session2Collection(0).Name)
Next

' Do something (anything) in session2. This makes the cacheNode2
' contact the cacheRoot and information about the updated data in
' the Person table is passed on to cacheNode2.
Dim derived As New DerivedPerson(session2)
derived.Save()

' Reload the session2 collection again, and now the change is recognized.
' In this case, the query goes through to the database.
session2Collection.Reload()
Assert.AreEqual("Billy's new name", session2Collection(0).Name)
```

For more information, refer to the online documentation: Cached Data Store.

Optimistic Locking

The primary purpose of Optimistic Locking is to prevent multiple clients from making conflicting modifications to the same object in the database. This is made possible by a field, called OptimisticLockField by default, that XPO adds to all persistent class tables. When objects are read from the database, the optimistic locking field is read together with the object content and stored. When a modification to an object is written to the database, the optimistic locking field is checked first, and if it has been changed in the meantime, XPO throws an exception. If everything is okay, the modification is written to the database and the optimistic locking field is updated; since it is an integer field by default, it is normally incremented.
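The read-check-increment cycle can be sketched in a few lines. This hypothetical C# example uses an in-memory table; `VersionedTable`, `OptimisticLockException`, and the `LockField` column name are assumptions made for illustration and do not reflect how XPO stores or checks OptimisticLockField internally.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical stand-in for the exception XPO throws on a conflict.
public sealed class OptimisticLockException : Exception
{
    public OptimisticLockException(string message) : base(message) { }
}

// Hypothetical in-memory table with an integer lock field per row.
public sealed class VersionedTable
{
    private readonly Dictionary<int, (string Name, int LockField)> rows =
        new Dictionary<int, (string Name, int LockField)>();

    public void Insert(int id, string name) => rows.Add(id, (name, 0));

    // Reading returns the content together with the lock field value.
    public (string Name, int LockField) Read(int id) => rows[id];

    // Writing checks that the lock field is unchanged since it was read,
    // then stores the change and increments the lock field.
    public void Update(int id, string newName, int lockFieldReadEarlier)
    {
        var current = rows[id];
        if (current.LockField != lockFieldReadEarlier)
            throw new OptimisticLockException($"Row {id} was changed by another client.");
        rows[id] = (newName, current.LockField + 1);
    }
}
```

The sketch is not thread-safe. A database-backed implementation would typically perform the check and the write in one statement, for example an UPDATE whose WHERE clause includes the lock field value read earlier, so that no other client can slip in between.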

As you have seen in various places in this article, the Optimistic Locking functionality accounts for a number of additional features in XPO. It is possible to switch off Optimistic Locking by setting the Session.LockingOption property to LockingOption.None instead of the default LockingOption.OptimisticLocking. But be aware that by doing this, you will not only lose the change detection functionality described in the previous paragraph, but also most of the selective reloading functionality; the only things that will still work are the XPBaseObject.Reload() and Session.DropCache() methods.
