As you may already know, we are evaluating the importance of ETL data processing among our users and considering ways in which to improve user experiences for common ETL (extract, transform, load) processes within .NET apps. To help us finalize our future plans, please take a moment to review this post and submit your feedback to us.

Important Note: This blog post is conceptual in nature - we have yet to produce a working ETL prototype. Accordingly, all screenshots and code snippets are mere mockups.

Common ETL Scenarios

Please estimate the relevance of the following TOP 3 user stories – user stories that we selected after analyzing hundreds of user responses in our initial survey.

User Story #1: Aggregate Data from Multiple Data Sources Using a Web Service (Web App)

Scenario/Need

A Web developer creates a backend that prepares data in a format required by the client JavaScript framework. Input data is collected from multiple web services in JSON format. Once collected, data must be consolidated and grouped.

Our ETL Solution Ideas

- Create and deploy an ETL model in code.

You should use our ETL API to write the model in code and pass it to EtlExecutor

Pseudo-code:

[ApiController]

[Route("api/[controller]")]

public class OrdersController : ControllerBase {

[HttpGet]

public IEnumerable<object> GetOrders() {

var sqlNode1 = new SqlNode() {

ConnectionString = "localdatabaseconnectionstring",

Sql = "select * from employees"

};

var calculatedColumn = new CalculatedColumn(sqlNode1) {

ColumnName = "FullName",

Expression = "[FirstName] + [SecondName]"

};

var jsonNode2 = new JsonNode() {

Uri = "https://sample.com/api/orders"

};

var parseDate = new ParseDateNode(jsonNode2) {

ColumnName = "Date",

Format = "yyyy-MM-dd HH:mm"

};

var filterNode = new FilterNode(parseDate) {

FilterString = "IsToday([Date])"

};

var joinNode = new JoinNode(calculatedColumn, filterNode) {

SourceColumn = "id",

TargetColumn = "employeeId"

};

var groupByNode = new GroupByNode(joinNode) {

GroupBy = new string[] { "FullName" },

SummaryList = new SummaryInfo[] {

new SummaryInfo() { Column = "Price", Summary = "Sum", NewColumnName = "TotalSales"},

new SummaryInfo() { Column = "Price", Summary = "Max", NewColumnName = "MaxSales"}

}

};

var renameColumn = new RenameColumn(groupByNode) {

OldName = "FullName",

NewName = "Employee name"

};

var outputJsonNode = new OutputJsonNode(renameColumn) {

Indent = false

};

var executor = new EtlExecutor(Mode.Debug);

return executor.Run(outputJsonNode);

}

}

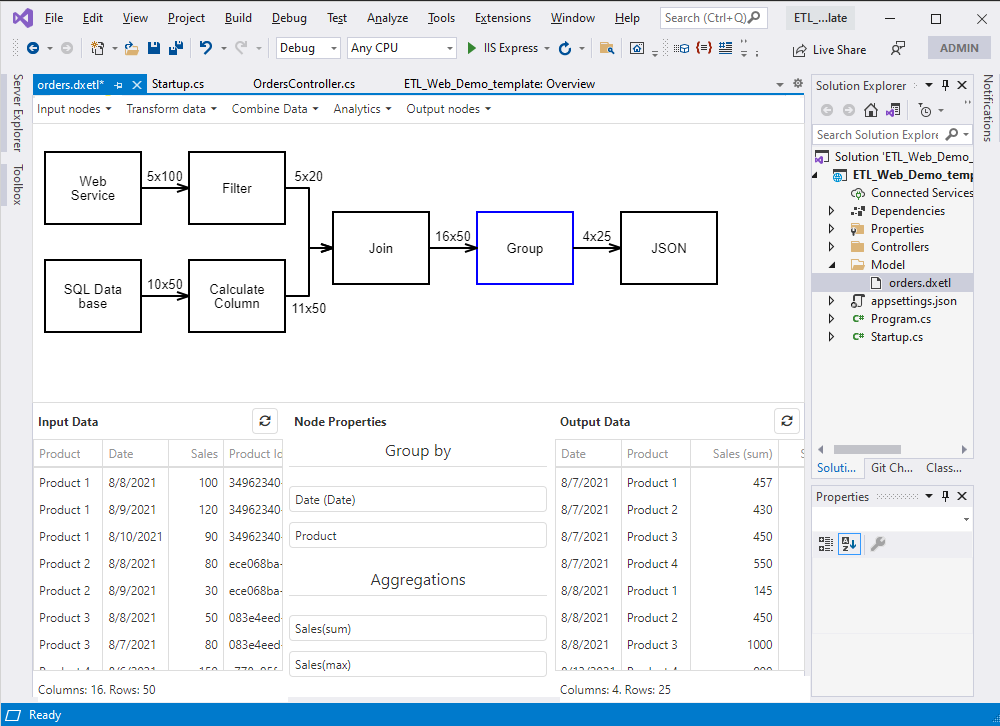

- Create and deploy an ETL model via a visual editor.

You can use the ‘Add New Item’ dialog in Visual Studio to create the new ETL model (“Devexpress ETL”) and customize it with a visual editor. See “Workflow Mockup” in the Technical Design section for screenshots.

You can then attach a pre-built ETL model to the EtlExecutor class in a controller class.

Pseudo-code:

[ApiController]

[Route("api/[controller]")]

public class OrdersController : ControllerBase {

[HttpGet]

public IEnumerable<object> GetOrders() {

var executor = new EtlExecutor(Mode.Debug);

return executor.Run("./Model/orders.dxetl");

}

}

User Story #2: Pre-process Data from External Databases/File Sources and Analyze Information via UI Components (Desktop App)

Scenario/Need

A desktop application developer implements an analytics module in a WinForms application. Source data is loaded from a SQL database and Excel files. Data must be consolidated and pre-processed before it is passed to the UI component (such as a Data Grid control).

Our ETL Solution Ideas

- Use the ETL API to write the model in code.

Pseudo-code:

private DataGridView dataGridView1 = new DataGridView();

...

var sqlNode1 = new SqlNode() {

ConnectionString = "localdatabaseconnectionstring",

Sql = "select * from employees"

};

var jsonNode2 = new JsonNode() {

Uri = "https://sample.com/api/orders"

};

var filterNode = new FilterNode(jsonNode2) {

FilterString = "IsToday([Date])"

};

var joinNode = new JoinNode(sqlNode1, filterNode) {

SourceColumn = "id",

TargetColumn = "employeeId"

};

var groupByNode = new GroupByNode(joinNode) {

GroupBy = new string[] { "FullName" },

SummaryList = new SummaryInfo[] {

new SummaryInfo() { Column = "Price", Summary = "Sum", NewColumnName = "TotalSales"},

new SummaryInfo() { Column = "Price", Summary = "Max", NewColumnName = "MaxSales"}

}

};

dataGridView1.DataSource = new ETLDataSource(groupByNode);

- Create and deploy an ETL model via a visual editor.

You can use the ‘Add New Item’ dialog in Visual Studio to create the new ETL model (“Devexpress ETL”) and customize it with a visual editor. See “Workflow Mockup” in the Technical Design section for screenshots.

You can then attach a pre-built ETL model to the Grid control using the ETL Data Source from the Create a New Data Source dialog.

User Story #3: Set up a Data Warehouse with Transformation Steps in Code

Scenario/Need

A developer creates an application that collects data from multiple data sources, transforms data (join, group, etc) and sends the resulting dataset to a database or a Warehouse.

Our ETL Solution Idea

- Create an ETL model in code.

Pseudo-code:

var sqlNode1 = new SqlNode() {

ConnectionString = "localdatabaseconnectionstring",

Sql = "select * from employees"

};

var calculatedColumn = new CalculatedColumn(sqlNode1) {

ColumnName = "FullName",

Expression = "[FirstName] + [SecondName]"

};

var jsonNode2 = new JsonNode() {

Uri = "https://sample.com/api/orders"

};

var parseDate = new ParseDateNode(jsonNode2) {

ColumnName = "Date",

Format = "yyyy-MM-dd HH:mm"

};

var filterNode = new FilterNode(parseDate) {

FilterString = "IsToday([Date])"

};

var joinNode = new JoinNode(calculatedColumn, filterNode) {

SourceColumn = "id",

TargetColumn = "employeeId"

};

var groupByNode = new GroupByNode(joinNode) {

GroupBy = new string[] { "FullName" },

SummaryList = new SummaryInfo[] {

new SummaryInfo() { Column = "Price", Summary = "Sum", NewColumnName = "TotalSales"},

new SummaryInfo() { Column = "Price", Summary = "Max", NewColumnName = "MaxSales"}

}

};

var renameColumn = new RenameColumn(groupByNode) {

OldName = "FullName",

NewName = "Employee name"

};

var outputJsonNode = new OutputJsonNode(renameColumn) {

Indent = false

};

var executor = new EtlExecutor(Mode.Debug);

var jsonString = executor.Run(outputJsonNode);

Options: Fine-tune an existing transformation function/parameter or create a custom transformation.

You can then create fully custom transformation steps:

class CustomStep: IEtlNode {

IDataVerctors Eval(IDataVerctors dataFlow, CustomStepOptions option) {

dataFlow.Add(option.NewColumnName, CustomTransformation(dataFlow[option.TargetColumn]));

return dataFlow;

}

}

You can also customize existing transformation steps:

public class CustomSummary : ICustomSummary {

decimal accumulator = decimal.MaxValue;

void AddValue(decimal value) {

if(accumulator < value) {

accumulator = value;

}

}

decimal GetResult() {

return accumulator;

}

}

var groupByNode = new GroupByNode(joinNode) {

GroupBy = new string[] { "FullName" },

SummaryList = new SummaryInfo[] {

new SummaryInfo() { Column = "Price", Summary = new CustomSummary(), NewColumnName = "TotalSales"},

}

};

Place a breakpoint near the required operation and run the application in debug mode to examine the current variable state. Optional: examine the model’s diagram and execute specific operations (steps).

Access any application’s .NET entity when debugging the ETL model. To apply changes to the ETL model, rebuild your application (no in-place modifications in debug mode).

Technical Design of Our ETL Solutions (Survey)

Based on your data processing/business requirements, please tell us what you consider most relevant (you can also leave a comment if necessary):

Workflow Mockup - Create and deploy an ETL Model

- Use the

Add New Item dialog in Visual Studio to add a new ETL model (“Devexpress ETL”).

- Define the ETL model via a visual editor.

· Add the first step (in this example, add a Web Service data source)

· Add more steps based on resulting data schema

- Write the EtlRunner code in controller / Bind a component to the data source.

Free DevExpress Products - Get Your Copy Today

The following free DevExpress product offers remain available. Should you have any questions about the free offers below, please submit a ticket via the

DevExpress Support Center at your convenience. We'll be happy to follow-up.